The Optimal Quantum of Temporal Decoupling

Contents

1. Introduction

This post is an extended and completely reworked version of our paper “The Optimal Quantum of Temporal Decoupling”, which I presented at the 29th Asia and South Pacific Design Automation Conference 2024. The preprint version of the paper can be downloaded here 🗎. A big “thank you” goes to Ruben for doing the hard work behind this paper.

The idea of this work is to shine a greater light on Temporal Decoupling (TD) in Electronic System Level (ESL) simulations. More specifically, we embarked on the quest to find and understand the optimal quantum. In contrast to the paper, this post focuses more on SystemC-based examples. Hence, some basic knowledge of SystemC is required to understand the rest of this post. For everything else, even including temporal decoupling, we provide some gentle introduction. This directly leads us to the first question:

2. What is Temporal Decoupling?

Temporal Decoupling (TD) is a modeling style that aims at speeding up (SystemC) simulations. The principles behind TD can best be explained by some minimal example.

Let’s suppose we want to model a very simple SoC comprising 2 CPUs. In terms of SystemC/C++, the system might look like this (download the cpp file here):

#include <iostream>

#include "systemc.h"

struct Cpu : public sc_module {

SC_HAS_PROCESS(Cpu);

void thread() {

while (true) {

// Do stuff...

std::cout << name() << ": " << sc_time_stamp() << std::endl;

wait(1, SC_NS);

}

}

Cpu(sc_module_name name) : sc_module(name) {

SC_THREAD(thread);

}

};

struct Soc : public sc_module {

SC_HAS_PROCESS(Soc);

Cpu cpu0, cpu1;

Soc(sc_module_name name) : sc_module(name), cpu0("cpu0"), cpu1("cpu1") {

}

};

int sc_main(int argc, char* argv[]) {

Soc soc("soc");

sc_start(10, SC_NS);

return 0;

}

As you can see, the two CPUs are repeatedly calling wait with a nanosecond delay in their thread, resulting in an effective clock speed of 1 GHz.

Usually, the “Do stuff…” part executes the current instruction of the CPU,

but for the sake of simplicity this is not modeled.

Thus, the example exhibits a typical SystemC loosely-timed (LT) style, in which each instruction executes in one cycle.

To see everything in action, execute the program above to get the following output:

soc.cpu0: 0 s

soc.cpu1: 0 s

soc.cpu0: 1 ns

soc.cpu1: 1 ns

soc.cpu0: 2 ns

soc.cpu1: 2 ns

[...]

The output also reveals that the SystemC kernel first executes the cycle of “cpu0”, while then executing the cycle of “cpu1”.

While there’s actually nothing wrong with this kind of modeling, the performance of the simulation might be somewhat disappointing.

Using this very simple example from above, I achieve at most 12 MIPS on my Intel i5-8265U (click here for a benchmark version).

For sure, it’s not the latest and greatest CPU, but 12 MIPS is nothing!

Especially, if you consider that the program doesn’t even do anything.

With other simulators, such as QEMU, I can easily crack 1000 MIPS.

I know, it’s a bold comparison, but I’ve heard people preferring QEMU-based simulations over SystemC-based simulations because “SystemC is so slow”.

And that leads us to very important question: Why is SystemC “so slow”?

Well, SystemC per se is not slow.

In the given example, it’s rather the frequent use of wait that cripples the simulation’s performance.

Because whenever wait is called, the SystemC kernel switches to the context of the other SC_THREAD.

While wait enables some kind of coroutine semantics, SystemC context switching comes at a relatively high price in terms of performance.

And this is where the idea of Temporal Decoupling (TD) begins.

Instead of doing a context switch for each cycle, we just let a CPU run for multiple cycles before switching to the other thread.

In other words: one CPU can run ahead of time, temporally decoupling it from the rest of the system.

Again, concepts are best explained by examples, so let’s look at the initial code, but now incorporating TD:

struct Cpu : public sc_module {

SC_HAS_PROCESS(Cpu);

tlm_utils::tlm_quantumkeeper qk;

void thread() {

while (true) {

if (qk.need_sync())

qk.sync();

// Do stuff..

std::cout << name() << " current time:" << qk.get_current_time() << std::endl;

qk.inc(sc_time(1, SC_NS));

}

}

Cpu(sc_module_name name) : sc_module(name) {

SC_THREAD(thread);

qk.reset();

}

};

struct Soc : public sc_module {

SC_HAS_PROCESS(Soc);

Cpu cpu0, cpu1;

Soc(sc_module_name name) : sc_module(name), cpu0("cpu0"), cpu1("cpu1") {

tlm_utils::tlm_quantumkeeper::set_global_quantum(sc_time(2, SC_NS));

}

};

int sc_main(int argc, char* argv[]) {

Soc soc("soc");

sc_start(6, SC_NS);

return 0;

}

Here, a few new things are introduced. First, there is:

tlm_utils::tlm_quantumkeeper::set_global_quantum(sc_time(2, SC_NS));

This static function sets the so-called quantum. The quantum is simply the maximum time a thread can run ahead of time. So, in the given example, a quantum of 2 nanoseconds allows the thread to simulate 2 cycles before switching to another thread. In the CPU threads, you now also find:

if (qk.need_sync())

qk.sync()

This simply checks if the thread has exhausted its quantum, and if so, syncs up with the rest of the system.

To advance the time, you don’t call wait anymore but qk.inc(sc_time(1, SC_NS)).

Ultimately, the TD simulation generates the following output:

soc.cpu1 current time:0 s

soc.cpu1 current time:1 ns

soc.cpu0 current time:0 s

soc.cpu0 current time:1 ns

soc.cpu1 current time:2 ns

soc.cpu1 current time:3 ns

soc.cpu0 current time:2 ns

soc.cpu0 current time:3 ns

...

As you can see, we now managed to cut the number of context switches in half with a quantum of 2 ns.

Using even higher quanta like 100 ns, the simulation speed could be increased to 120 MIPS on my computer!

That means, the SystemC simulation is now 10x faster than without TD!

This observation is in line with the SystemC language reference manual [1],

which also describes a potential speedup of up to 10x when using TD.

Ez pz, problem solved… you may think.

Well, as so often in life, there’s no free lunch, and unfortunately, this also applies to TD. Since some threads might advance into the future, we are changing the semantics of the simulation. This opens the door to a whole new universe of things that may go wrong and impact the functionality/accuracy of simulations. So, finding an “optimal” quantum that yields the best compromise between performance and accuracy is one of the key challenges when using TD. And that is where the story of this post begins!

3. The Story

As part of an industry project, my institute developed a faster version of the simulator gem5. We managed to speed up gem5 by more than 20x by employing some kind of parallel temporal decoupling. It’s basically the same principle as above, but instead of simulating the quanta one after another, we are doing everything in parallel. After a few months of development, we eventually shipped the first version of the simulator to our industry partner.

Much to our surprise, they said it didn’t work.

So, we had a joint debug session and eventually figured out the reason: the quantum was set to 1 second.

That’s a completely absurd value.

It’s like ordering water in a restaurant and suddenly the waiter starts to flood the restaurant.

In order to have a working simulation, you need quanta like 1µs or 10µs, not 1s.

But I guess it was my fault, as I told them to increase the quantum if they want to have more performance. I mean it’s not wrong, but I should also have told them that an increased quantum may impact accuracy or even functionality. Moreover, I could have just provided some example values.

So I thought, maybe there’s some literature that could explain the relation between quantum and accuracy more in detail. At that point, even we had little understanding and just chose our quanta by observation. Or in other words: the simulation is fast and doesn’t crash? That’s a good quantum. Well, every work I found provided the same fuzzy explanation and used the same empirical methods which we also employed. To give you some examples:

J. Engblom [2]:

“Time quantum lengths of 10k to 1M cycles are needed to maximize VP performance.

Most of the time, software functionality and correctness are unaffected by TD, and the default should be to use long time quanta.”

Ryckbosch et al. [3]: “We set the simulation window to 10ms and the simulation quantum to 100ms in all of our experiments. We experimentally evaluated different values for the simulation window and quantum, and we found the above values to be effective.”

J. Joy [4]: “Increasing the quantum can cause a thread to run for a longer time, thus reducing the context switching overhead. This increases the simulation speed, but at the cost of accuracy.”

Jünger et al. [5]: “To increase performance, the quantum should be as large as possible to reduce context switching. However, a large quantum reduces simulation accuracy, as events may be handled too late. Therefore, deploying TD is not trivial.”

Apparently, they all draw the same image of more quantum, more speed, but less accuracy:

However, a quantized relation is missing in all of the mentioned works. Sure, some of the works provide speedup/quantum graphs, but they rather stick to observations than explanations. Fortunately, for me as a Phd student, these kinds of unresolved mysteries offer the perfect opportunity to write a paper. So, in the next few subsections, I’ll try to bring some light into the darkness by using analytical models to describe speedup and accuracy.

4. Analytical Models

Analytical models are a popular approach in computer science/engineering to describe a complex systems by simple mathematical means. Some famous examples include: Amdahl’s law [6], Gustaffson’s law [7], or the Roofline model [8]. Often the goal is not to describe something 100% accurately, but to find a parsimonious yet evocative model. Or in the words of George Box: “All models are wrong, but some are useful”. With a similar thought in mind, the following subsections introduce analytical models for performance and accuracy prediction in temporally-decoupled simulations.

4.1 A Speedup Model

In this subsection, a speedup model for TD simulations is introduced.

As already mentioned before, the speedup of a TD simulation is attained by reducing the number of the simulator’s context switches.

Thus, for an ideal simulation without any context switches,

the execution time ($T_{ideal}$) is simply given by the sum of the time of all simulation segments $T_i$:

Or in mathematical terms:

\begin{equation} \label{eq:6}

T_{ideal} = \sum_{i=1}^{K} T_ {i}

\end{equation}

Practically, there are context switches (CS) between the individual simulation segments leading to a prolongued execution time $T_{real}$:

This can be modelled by an abstract, relative overhead $O_c$

\begin{equation} \label{eq:7}

T_{real} = T_{ideal} \cdot (1 + O_c)

\end{equation}

This overhead is almost inversely proportional to the chosen quantum ($t_{\Delta q}$). Because if we double the quantum, we almost halve the number of context switches. Note it’s “almost” because of the process at the end, which doesn’t really have a context switch. Since most real-world simulations have way more than just a handful of context switches, this last missing context switch is negligible. We’re also assuming that the quantum is larger than the average event distance. For example, using quanta below 1 ns for a CPU system with a 1 ns clock cycle wouldn’t result in any changes. But again, for most real-world scenarios this assumption should hold valid.

Using an inverse relation between quantum and overhead, the resulting formula is:

\begin{equation} \label{eq:8} T_{real} = T_{ideal} \cdot \left(1 + \frac{O_c’}{t_{\Delta q}} \right) \end{equation}

Now we are left with an overhead factor $O_c’$. This factor can be determined by curve fitting or running two reference simulations. For the latter the formula is:

\begin{equation} \label{eq:9} \begin{split} \frac{T_{real}(t_{\Delta q1})}{T_{real}(t_{\Delta q2})} = \frac{1 + \frac{O’}{t_{\Delta q1}}}{1 + \frac{O’}{t_{\Delta q2}}} \Rightarrow O_c’ = \frac{T(t_{\Delta q_1}) - T(t_{\Delta q_2})}{\frac{T(t_{\Delta q_2})}{t_{\Delta q_1}} - \frac{T(t_{\Delta q_1})}{t_{\Delta q_2}} } \end{split} \end{equation}

To accurately determine the factor $O_c’$, we recommend choosing low quanta, for which the context switching time is a significant fraction of the total simulation time. This overhead factor also has meaning. For example, a factor $O_c’ = 15 ns$ implies that at a quantum of 15 ns half of the execution time is spent in context switching.

Ultimately, the speedup can be formulated as:

\begin{equation} \label{eq:10}

S(t_{\Delta q}) = \frac{T_{ideal}}{T_{real}} = \frac{t_{\Delta q}}{t_{\Delta q} + O_c’}

\end{equation}

Note that this equation always yields values smaller than 1.

We chose this design for several reasons.

First, the goal of TD is to reduce the number of context switches, which is just a performance-degrading environmental effect.

Hence, TD doesn’t really make simulations faster, but it prevents them from being slowed down.

Second, with this representation, it is very easy to see, how close you are to the theoretical optimum.

For example, if the speedup is already at 0.99, increasing the quantum will not yield in any significant performance increases.

To already provide a visual impression of the model, I decided to run an experiment with the system from the 2. What is Temporal Decoupling? section.

In the given graph, the model’s prediction is depicted in orange, while the measurement is represented by the blue line. Using the formula approach, an overhead factor of $O_c’ = 10.95ns$ was determined. If you want to conduct this experiment on your own, feel free to use the benchmark and the corresponding python script for the graph. More extensive experiments are presented in Section 5.1 Speedup/Accuracy Experiments. In the next subsection, the second important aspect of TD is discussed: accuracy.

4.2 An Accuracy Model

While the aspect of speedup was very clearly defined, the term “accuracy” (or “inaccuracy”) can be understood in multiple ways. First of all, “accuracy” can be categorized into qualitative and quantitative aspects.

Qualitative inaccuracy includes all effects that can hardly be expressed as a metric and lead to changed simulation semantics. For example, if TD leads to the crash of a program, you observed qualitative inaccuracy.

Quantitative accuracy, on the other hand, is something that can be meaningfully captured in numbers. For example, it can be the accuracy of interrupt timings, cache hit rates, memory bandwidth, simulation time, etc. Since some simulations offer hundreds of simulation statistics, the question arises of which one to pick. For our model and experiments, we only chose the target simulation time as a representative measure of accuracy. This statistic is present in all SystemC simulations and it may capture the influence of various other factors. Ultimately, a simulation user must individually consider which particular simulation statistics are relevant.

As before, we tried to develop an analytical model to predict and understand accuracy. Of course this model is limited to quantitative accuracy, because the point of qualitative accuracy is its non-numerical nature. We’re also only modeling target simulation time for the aforementioned reasons. So, the first step in the model design was to think about, which situation in TD could lead to a changed target simulation time, Well, there are actually a few situations with different outcomes, but we thought that the most prevalent one is process communication. In practice this covers cases like two target CPUs communicating over shared memory. Let’s stick to this example an take a look at the following visulization:

In the given example, Process 2 wants to send a message to Process 1. For the bidirectional case, Process 2 also expects a response from Process 1. The numbers in the white circles indicate the order in which the processes were executed as this leads to different outcomes. The example also assumes that Process 2 starts with the communication in the middle of its quantum. Let’s dissect the individual cases one by one to get a better understanding.

For unidirectional communication, there are 2 subcases: Process 2 gets executed first, leading to Process 1 receiving the message $t_{\Delta q}/2$ earlier compared to a non-TD simulation. In the vice versa case, the message is received later by $t_{\Delta q}/2$. If both cases are assumed to be equally likely, there should be no change in target simulation on average. One may argue about the different semantical impacts of receiving data later or earlier, but let’s not overcomplicate things and head to the next case.

For bidirectional communication, there are 3 different subcases: Process 2 first, then Process 1 leads to a delay of $t_{\Delta q}/2$. Process 1 first, Process 2 second and third, Process 1 fourth, leads to a delay of $3t_{\Delta q}/2$. Process 1 first, Process 2 second, Process 3 third, Process 4 fourth, leads to a delay of $t_{\Delta q}/2$. As you can see, all cases lead to a prolongued communication, which ultimately may lead to a prolongued target simulation time if the communication was on the program’s critical path, We can also see, that that this extended time depends linearly on the quantum. So far the model assumed a communication in the middle of a quantum, which may be a little bit too simple. To make it more accurate we modeled communications as randomly occurring events, leading us to the Poisson distribution. The average incured prolonguation time per quantum (Case 1 and Case 3) can then be calculated as follows:

\begin{equation} \label{eq10}

\begin{split}

t_d & = t_{\Delta q} - E(X | X \leq t_{\Delta q}) P(X < t_{\Delta q}) - t_{\Delta q} P(X > t_{\Delta q}) \\\

& = t_{\Delta q} - \int_{0}^{t_{\Delta q}} rt e^{-r t} \,dt - \int_{t_{\Delta q}}^{\infty} rt_{\Delta q} e^{-r t} \,dt \\\

%& = t_{\Delta q} - (r t_{\Delta q} e^{-r t_{\Delta q}}) + rt_{\Delta q} e^{-t_{\Delta q} t} \,dt \\\

& = t_{\Delta q} - \frac{1 - e^{-r t_{\Delta q}}}{r} \\\

& = t_{\Delta q} - (1 - e^{-r t_{\Delta q}})/r

\end{split}

\end{equation}

This results in the relative timing inaccuracy of:

\begin{equation} \label{eq11}

I = \frac{t_{\Delta q}}{t_{\Delta q} - t_d} - 1 = \frac{r \cdot t_{\Delta q}}{1 - e^{-r t_{\Delta q}}} - 1 \approx r \cdot t_{\Delta q}

\end{equation}

With $r$ being the rate of cross-scheduled events per time unit.

The result is a hockey stick curve, which can be approximated by a simple linear curve (note that Case 2 yields a similar result).

This linear curve is in stark contrast to the sigmoidal speedup model.

While the attainable speedup eventually saturates, the inaccuracy continues to increase indefinitely.

This underpins why the choice of the optimal quantum is so essential.

Without specifying the linear factor in particular, the inaccuracy equation can also be written as: \begin{equation} \label{eq12} I = \alpha \cdot t_{\Delta q} \end{equation} The factor $\alpha$ can be determined by two reference simulations or curve fitting.

5. Practical Assesment

To see whether the model can stand the test of practice, running some simulations is a neccessity. All following simulations were executed on an AMD Ryzen 3990x (64 physical cores/128 logical cores) host system.

5.1 Speedup/Accuracy Experiments

This is currently under construction.

5.2 Qualitative Accuracy

Now to one of my favorite subsection: qualitative accurracy.

As already mentioned, this concerns all effects, which change the semantics of the simulation and can hardly be captured in numbers.

That means, without TD a simulation did A and with TD it suddenly does B.

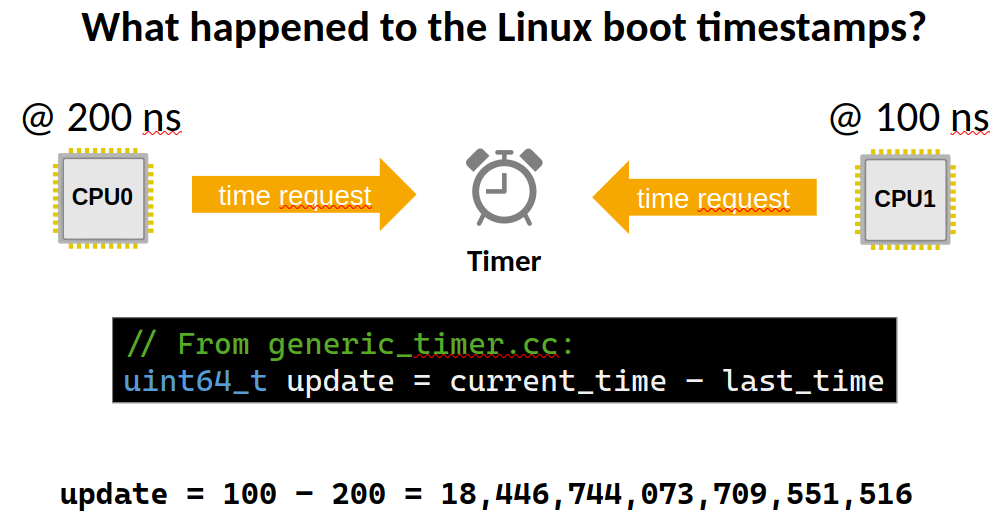

To start with a tangible example, take a look at the following Linux boot timestamps that we obtained from default gem5 and our proprietary version with TD:

In the TD simulation, the timestamps suddenly jump to extremely high numbers, which are also occasionally jumping back in time.

Obviously, something went wrong here, with TD probably being the culprit. But what exactly happened?

After spending way too much time debugging, we ultimately found the problem

in gem5’s implementation of the ARM virtual count CNTVCT_EL0 register.

This register holds an increasing count value, which is later used by Linux to derive the timestamps.

When fetching the register, the current value is calculated by the time difference between the current and the last access.

However, in TD simulations some simulation threads can run ahead of time.

That means the last access may have a higher timestamp, resulting in a negative delta.

Since gem5 stores this delta in an unsigned integer, exploding values are the consequence.

Or to summarize this in a slide from my ASP-DAC presentation:

The solution for this problem is quite simple: restrict deltas to be greater than zero.

After that, we were finally able to boot Linux using temporally-decoupled gem5.

Interestingly, J. Engblom [12] observed the same issue completely independent of ours.

He also proposes a restriction to deltas greater than or equal to zero as a solution.

The second type of observed error arises from delayed communication between simulation objects.

As previously explained, events or messages from one process to another may only become apparent at the beginning of a quantum.

This leads to a communication latency that grows quasi-proportionally with the quantum.

This communication latency could also be oberserved when executing a multi-threaded NPB benchmark with AVP64 [9],

where the synchronization of threads was delayed by TD.

Well, in theory this delay was avoidable, because thread synchronization is usually achieved by putting a waiting CPU into a low-power state.

For ARM this could be a WFI instruction.

Whenever the simulation encounters such an instruction, it could terminate the quantum early to increase performance and accuracy.

Unfortunately, due to a bug in AVP64, the WFI instruction was executed as NOP.

Note that such a behavior is actually allowed according to the ARM reference manual manual, which is why WFI instructions are usually guarded by spin loop executing NOPs.

For large quanta, this leads to an interesting effect: The total number of instructions executed increases, causing the speedup measured in host execution time to decrease.

However, the speedup of the simulator measured in MIPS stagnates or even increases since NOPs are easy to simulate.

As shown in the following figures, first effects are already visible at $t_{\Delta q}>1ms$:

At $t_{\Delta q}>100ms$, more than half of the time is spent in spin loops.

To conclude, if someone is selling a simulator that can achieve a lot of MIPS, it may actually be executing NOPs.

In addition to the effects on simulation performance, throughput or functionality of peripherals can also be affected by delayed communication.

As an example, we executed the iperf3 [13] benchmark in avp64 with the VP as a server and the host system as a client.

In our configuration, the benchmark determines the maximum throughput of a TCP-based connection between a server and a client.

As shown in the following figure, the throughput rapidly decreases from 2690 Mbit/s at $t_{\Delta q}=1µs$ to 77 MBit/s at $t_{\Delta q}=100µs$:

This performance drop can be explained by the implementation of the OpenCores Ethernet device ETHOC [14], which is used in avp64.

The device uses one thread each for sending and receiving Ethernet frames, and each of these threads is executed only once per quantum.

Thus, only one Ethernet frame can be received per quantum, which limits the maximum achievable throughput.

Ultimately, this can affect the data rate to such an extent that timeouts of the network driver watchdog occur.

The choice to send/receive only one packet per quantum is probably due to the fact that TD was not properly taken into account during the device implementation.

It would be more accurate to calculate the number of packets to be processed once per quantum based on the elapsed time.

Since the respective thread is still activated once per quantum, there would be no performance loss.

With this explanation, a steadily decreasing throughput would be expected, but we saw that the value stagnates from a quantum of 100µs. The explanation for this can be found in the Linux’s NAPI which is responsible for interrupt handling of network devices. When the system receives an Ethernet frame, an interrupt is generated, which leads to the execution of an Interrupt Service Routine (ISR) as in most systems. However, since network connections can transfer considerable amounts of data, the resulting interrupts can have a significant impact on the performance of the system. Therefore, after receiving an interrupt, NAPI masks the corresponding interrupt and switches to a poll mode for a certain time, waiting for more packets to accumulate. Only after a certain time has elapsed, it switches back to interrupt mode and a WFI instruction is executed. If implemented correctly, the execution of a WFI instruction leads to an early termination of the quantum, allowing the reception of the next of a next Ethernet frame.

6. Conclusion

- More quantum, more speed, less accuracy

- Diminshing performance returns

- Inaccuracy grows linearly

- Temporal decoupliing may break your simulation

- Many ways

- Temporal decoupling aware design

- gem5 timer fix

- Ethernet adapter fix

7. Related Work

“Related Work” section at the end as the motivation of this paper was a lack of related work. Anyway, here’s a list of works/website, which I consider related our paper:

What is temporal decoupling?

Interesting works about temporal decoupling (from relevant ot less relevant)

- Evaluating Temporal Decoupling in a Virtual Platform, Jinju Joy, 2020

- Some Notes on Temporal Decoupling, Jakob Engblom, 2022 [12]

- Temporal Decoupling – Are “Fast” and “Correct” Mutually Exclusive?, Jakob Engblom, 2018 [2]

- Optimizing Temporal Decoupling using Event Relevance, Jünger et al., 2021, [15]

- Temporal decoupling with error-bounded predictive quantum control, Glaser et al., 2015 [16]

- Speculative Temporal Decoupling Using fork(), Jung et al., 2019, [17]

- Efficient Parallel Transaction Level Simulation by Exploiting Temporal Decoupling, Khaligh et al., 2009 [18]

Analytical models and computer simulation

- Cost/Performance of a Parallel Computer Simulator, Falsafi et al., 1994, [19]

- A Comparison of Two Approaches to Parallel Simulation of Multiprocessors, Over et al., 2007, [20]

8. References

- [1]“IEEE Standard for Standard SystemC Language Reference Manual,” IEEE Std 1666-2011 (Revision of IEEE Std 1666-2005), 2012, doi: 10.1109/IEEESTD.2012.6134619.

- [2]J. Engblom, “Temporal Decoupling-Are ‘Fast’and ‘Correct’Mutually Exclusive?,” in DVCon Europe, 2018.

- [3]F. Ryckbosch, S. Polfliet, and L. Eeckhout, “VSim: Simulating Multi-Server Setups at near Native Hardware Speed,” ACM Trans. Archit. Code Optim., Jan. 2012.

- [4]J. Joy, “Evaluating Temporal Decoupling in a Virtual Platform.” 2020 [Online]. Available at: https://www.diva-portal.org/smash/get/diva2:1530379/FULLTEXT01.pdf

- [5]L. Jünger, A. Belke, and R. Leupers, “Software-defined Temporal Decoupling in Virtual Platforms,” in 2021 IEEE 34th International System-on-Chip Conference (SOCC), 2021, pp. 40–45, doi: 10.1109/SOCC52499.2021.9739242.

- [6]G. M. Amdahl, “Validity of the Single Processor Approach to Achieving Large Scale Computing Capabilities,” in Proceedings of the April 18-20, 1967, Spring Joint Computer Conference, 1967.

- [7]J. L. Gustafson, “Reevaluating Amdahl’s Law,” Commun. ACM, vol. 31, no. 5, 1988.

- [8]S. Williams, A. Waterman, and D. Patterson, “Roofline: An Insightful Visual Performance Model for Multicore Architectures,” Commun. ACM, vol. 52, no. 4, Apr. 2009.

- [9]“ARMv8 Virtual Platform (AVP64).” [Online]. Available at: https://github.com/aut0/avp64

- [10]L. Jünger, J. H. Weinstock, and R. Leupers, “SIM-V: Fast, Parallel RISC-V Simulation for Rapid Software Verification,” DVCON Europe, 2022.

- [11]F. Bellard, “QEMU, a Fast and Portable Dynamic Translator.,” 2005, pp. 41–46.

- [12]J. Engblom, “Some Notes on Temporal Decoupling.” 2022 [Online]. Available at: https://jakob.engbloms.se/archives/3467

- [13]“iperf3 benchmark.” [Online]. Available at: https://software.es.net/iperf/

- [14]“OpenCores Ethernet MAC 10/100 Mbps.” [Online]. Available at: https://opencores.org/projects/ethmac

- [15]L. Jünger, C. Bianco, K. Niederholtmeyer, D. Petras, and R. Leupers, “Optimizing Temporal Decoupling using Event Relevance,” in ASP-DAC, 2021.

- [16]G. Glaser, G. Nitsche, and E. Hennig, “Temporal decoupling with error-bounded predictive quantum control,” in FDL, 2015.

- [17]M. Jung, F. Schnicke, M. Damm, T. Kuhn, and N. Wehn, “Speculative Temporal Decoupling Using fork(),” in DATE, 2019, doi: 10.23919/DATE.2019.8714823.

- [18]R. Salimi Khaligh and M. Radetzki, “Efficient Parallel Transaction Level Simulation by Exploiting Temporal Decoupling,” in Analysis, Architectures and Modelling of Embedded Systems, Berlin, Heidelberg, 2009, pp. 149–158.

- [19]B. Falsafi and D. A. Wood, “Cost/Performance of a Parallel Computer Simulator,” in Proceedings of the Eighth Workshop on Parallel and Distributed Simulation, 1994.

- [20]A. Over, B. Clarke, and P. Strazdins, “A Comparison of Two Approaches to Parallel Simulation of Multiprocessors,” Performance Analysis of Systems and Software, IEEE International Symmposium on, vol. 0, pp. 12–22, Apr. 2007, doi: 10.1109/ISPASS.2007.363732.